Microsoft 365 Copilot Security: the hidden risk when AI meets over-provisioned access

Your M365 Copilot is only as secure as your access controls. With 95% of permissions going unused, AI assistants can accidentally expose sensitive data to the wrong people. Learn to fix it.


This article is part of Oleria's M365 Copilot security series – see more resources here.

Enterprises are eager to adopt new generative AI (GAI) tools, but remain wary of opening them up broadly to internal users and to external users such as customers and consumers. They worry about hallucinations and about accidentally exposing sensitive, confidential, or regulated business data. In fact, a recent survey found that data security concerns were, by far, the biggest barrier to enterprise adoption of AI agents.

One type of GAI that is seeing widespread adoption is the new breed of enterprise search or "copilots" like Microsoft 365 Copilot that promise to dramatically accelerate day-to-day workflows by helping employees find the information they need — much, much faster. These AI assistants are widely viewed as safer from a data privacy and security perspective, because they appear limited to internal, authorized users through M365 Copilot access control mechanisms.

Yet these copilots exacerbate a problem that already exists in most enterprises: over-provisioned and unintended access.

The uncomfortable truth: It's not the copilot’s fault for accessing data

This concern, that AI tools will spread sensitive information far beyond its intended boundaries, is what's holding back most enterprise use cases of AI right now, according to Professor Stefano Puntoni, co-director of Penn's AI at Wharton program. "Our research shows that significant enterprise adoption of GAI is already taking place," Puntoni said via email. "Yet IT and business leaders remain highly concerned about the potential disclosure of sensitive company and customer data."

Businesses feel safer with internal applications, in part because leading enterprise copilots have promised to respect users' existing permissions and prevent unauthorized access to sensitive data. In other words, if a user doesn't have permissions to access the data, then a copilot won't be able to access it on their behalf.

But that notion of data privacy hinges on an overconfident and misplaced sense of control over which internal users have access to what sensitive data.

The ugly numbers behind identity and access control

The reality is that business priorities and pressures are not exactly aligned with strictly enforcing least-privilege principles in terms of granting access to sensitive information. This is why we see stats like those from Microsoft, showing:

- 95% of permissions are unused

- 90% of identities are using just 5% of their granted permissions

In fact, when we talk to CISOs, one of their biggest concerns is not only that their users are over-provisioned, but that they (the CISOs) don't know which sensitive data their users can access but probably shouldn't. This is what we call unintended access.

This all gets at an ugly truth that works against even the most well-intentioned AI vendor: There's no way for AI tools to differentiate between legitimate and unintended, over-provisioned access. The core objective of a copilot is to gather all the relevant information it can. And if you give it access — intentionally or otherwise — it will take it.
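To make that point concrete, here is a minimal sketch of permission-trimmed retrieval. It is an illustration only, not how M365 Copilot is implemented: the `Document` type, the `retrieve_for_user` function, the group names, and the sample corpus are all hypothetical. The sketch assumes a retriever that checks whether access exists and has no notion of whether that access was intended.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset  # groups whose members may read this document


def retrieve_for_user(query, user_groups, corpus):
    """Return titles of documents that match the query AND that the user can read.

    The filter only asks "does access exist?", never "was it intended?".
    """
    terms = query.lower().split()
    return [
        doc.title
        for doc in corpus
        if doc.allowed_groups & user_groups                   # permission trimming
        and any(term in doc.text.lower() for term in terms)   # naive keyword match
    ]


# Hypothetical corpus: the second document is also readable by a stale, broad group.
corpus = [
    Document("Q3 roadmap", "roadmap and salary budget for engineering",
             frozenset({"engineering"})),
    Document("Executive compensation", "salary and bonus details for executives",
             frozenset({"hr-admins", "all-staff-legacy"})),
]

# A sales user who was never removed from the legacy group.
sales_user_groups = {"sales", "all-staff-legacy"}
print(retrieve_for_user("salary", sales_user_groups, corpus))
# prints ['Executive compensation']
```

The retriever behaves exactly as promised, respecting the user's permissions, and still surfaces the compensation document, because the stale group membership is indistinguishable from a legitimate grant.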

Real-world risks: How Copilot can accidentally leak confidential data

It's not hard to see how quickly this snowballs into serious AI copilot security risks. M365 Copilot, and similar generative AI tools, can inadvertently expose sensitive information due to their ability to process and synthesize data from various sources.

- Leakage of Sensitive Code and Intellectual Property: Developers using AI coding assistants (like GitHub Copilot) have reported instances where proprietary code or confidential project details were suggested as completions to other users. This occurs when the AI models are trained on a broad dataset that may inadvertently include sensitive information from private repositories. For example, developers have found snippets of other companies' private code within their suggested code.

- Exposure of Personally Identifiable Information (PII) through Chatbots: Several companies deploying AI-powered chatbots have encountered situations where the bots revealed sensitive customer data, such as addresses, phone numbers, or even financial details, during conversations. This happens when the AI is not properly trained to recognize and redact PII, or when it misinterprets user requests. In early iterations of large language models, users were able to prompt the AI to repeat back training data, which in some cases included PII.

- Data Spillage from AI-powered Search and Summarization Tools: Tools that summarize documents or search across large datasets can inadvertently expose information if access controls are not rigorously enforced. In legal discovery, for example, AI tools have been used to sift through vast amounts of documents, and if not configured correctly, this can lead to the exposure of privileged or confidential information to unauthorized parties.

At minimum, scenarios like these will create uncomfortable situations for business leaders and painful nuisances for security leaders. Those situations could easily spiral into more significant damage to the business, such as a loss of competitive advantage. And maintaining compliance with regulations like HIPAA and CCPA will be virtually impossible if GenAI tools readily expose gaps and blind spots like these.

Why over-provisioning persists in the modern enterprise

Speed and agility are a top priority (often the top priority) for every successful business today, and that priority is the root of the over-provisioning problem.

The need to onboard new & remote employees faster

As new employees are onboarded and/or new apps and systems are added, it's a race to give users the access they need to get their work done and start seeing business value (from the new employee or from the new application).

Couple that with IT and security teams that are often already stretched thin, and it's no surprise that the most common approach is to grant permissions through overly simplistic rule- or role-based provisioning processes. These shortcuts don't fully consider the user's actual business needs (e.g., granting ERP system access to all finance department users).
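The gap between a department-level rule and an individual's actual need can be sketched in a few lines. Everything here is hypothetical: the `ROLE_RULES` table, the permission names, and the assumption that a user's real needs are known up front (in practice they usually have to be inferred from usage).

```python
# Hypothetical department-level rule: everyone in a department gets the same bundle.
ROLE_RULES = {
    "finance": {"erp:read", "erp:post_journal", "erp:approve_payment", "payroll:read"},
}


def provision(department):
    """Permissions granted by the coarse, department-wide rule."""
    return set(ROLE_RULES.get(department, set()))


def excess_access(department, needed):
    """Permissions the rule grants that this user does not actually need."""
    return provision(department) - set(needed)


# An accounts-payable analyst who only needs to read ERP data.
print(sorted(excess_access("finance", {"erp:read"})))
# prints ['erp:approve_payment', 'erp:post_journal', 'payroll:read']
```

Three of the four permissions granted by the rule are excess for this user, and each one is access a copilot could later act on.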

Your SaaS applications create a perfect storm for data leaks

Moreover, the decentralized way new applications are deployed today — by the business units rather than IT — adds to this problem. Access is often granted outside typical IT processes, making it harder for IT and security teams to see and manage that access.

On top of that, as the SaaS ecosystem and broader IT estate grows larger and more complex, unintended access can also occur due to misconfigured settings between connected applications (e.g., granting access to compensation data via a connected SaaS HR application when only role and organizational hierarchy information was intended).
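The HR example above amounts to a difference between what an integration was meant to share and what it actually shares. A minimal sketch of that check, assuming you can list the fields an integration exposes (the field names and the `unintended_fields` helper are hypothetical):

```python
# Fields the integration was meant to share (hypothetical).
INTENDED_FIELDS = {"role", "manager", "department"}


def unintended_fields(shared_fields, intended=INTENDED_FIELDS):
    """Fields the integration exposes beyond what was intended."""
    return set(shared_fields) - set(intended)


# What the connected HR application actually exposes (hypothetical).
hr_integration_shares = ["role", "manager", "department", "compensation"]
print(sorted(unintended_fields(hr_integration_shares)))
# prints ['compensation']
```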

The fix? Enforce least-privilege & build toward zero-trust

Solving these issues doesn't require any radical rethinking. It's just a matter of implementing best practices around least-privilege and zero-trust.

The sunny outlook is that GAI will give organizations a healthy shove toward adopting the technologies needed to (finally) implement zero trust. "While breaches caused by over-provisioned access have been a top risk for organizations for years, the rise of powerful AI applications like enhanced enterprise search should create a rallying cry for organizations to adopt the next-generation of identity security and access management solutions," Taher Elgamal, Partner at Evolution Equity Partners and former CTO Security at Salesforce (and the "father of SSL encryption"), recently shared with us. Elgamal also pointed to the White House's executive order forcing federal agencies to more urgently adopt zero trust. "The adoption of zero trust is no longer optional or a nice to have."

The reality check: The zero trust gap

Yet, the view within many organizations is much less sunny. We hear from CISOs every day that their existing identity and access management (IAM) programs just aren't built to handle the evolving challenges of our AI age. They burden security teams with tedious, manual approaches to stitching together the visibility they need to see and address over-provisioned and unintended access — manual processes that are too slow to keep up with GenAI tools' capabilities.

This is why most organizations struggle to enforce least-privilege principles, and why Gartner predicts that — nearly 15 years after it first endorsed the zero-trust framework — just 10% of large organizations will have a "mature and measurable" zero-trust program in place by 2026, up from only 1% today.

How Oleria built better identity security for M365 and enterprise copilots

Our experience as operators, including our firsthand experience with visibility and control gaps around identity security, led us to create Oleria. Back when GenAI was really starting to ramp up, we recognized how rampant over-provisioning and outdated, underpowered identity security tools were making identity the biggest source of risk and breaches in the enterprise world.

But the capabilities we built with Oleria's Trustfusion Platform and Oleria Identity Security uniquely meet the moment we're in now: Oleria is built to give CISOs and security teams the visibility and control they need to enable speed and agility, while protecting against AI assistant security risks and unintended data exposure through proper identity security for AI.

Key capabilities for secure AI implementation

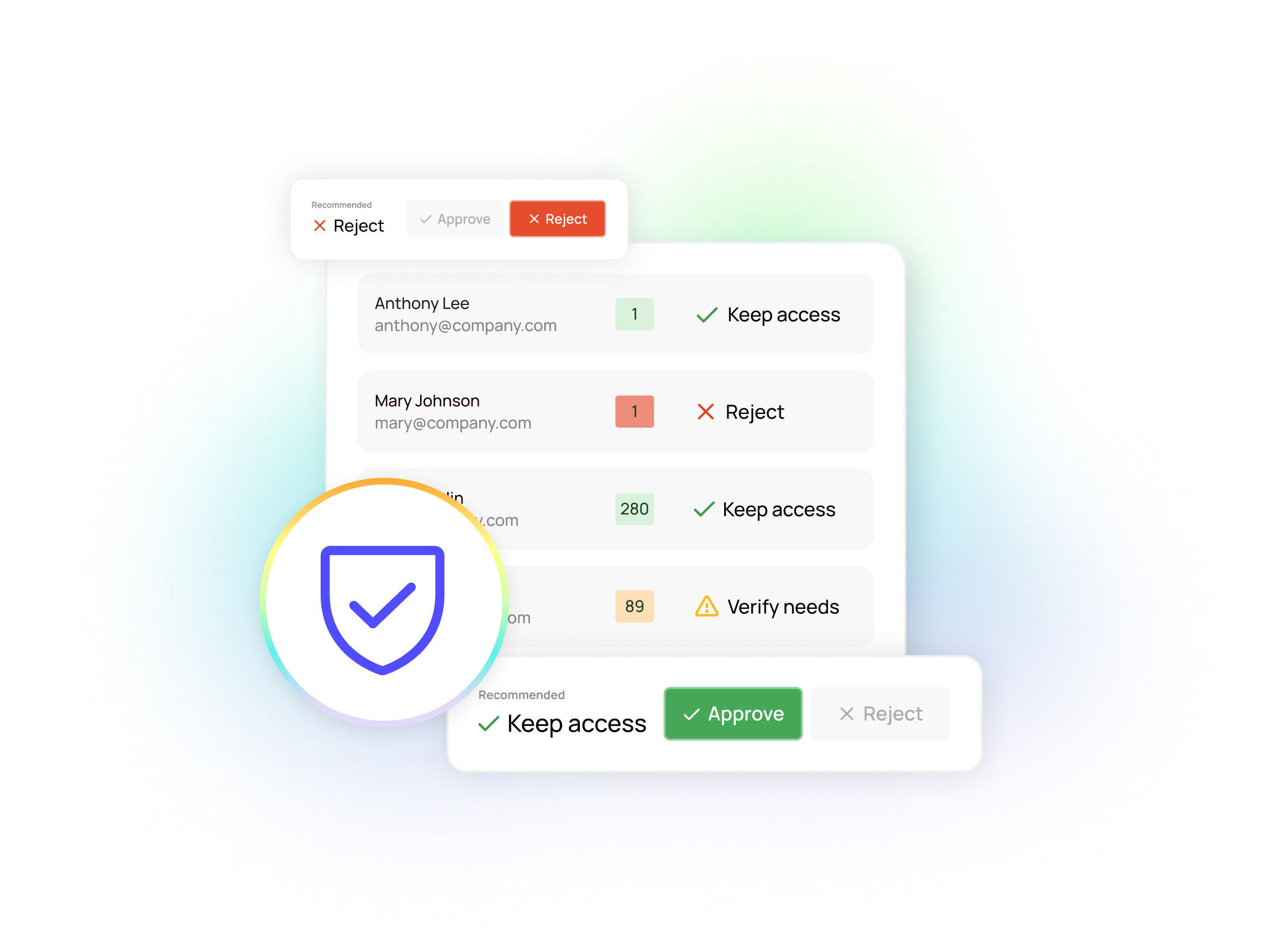

We're providing a composite view of access permissions across all IAM and SaaS applications in one place — giving you visibility down to the level of the individual resource and fine-grained operation. We're using that broad and deep visibility to automatically surface unused accounts, flag individual user accounts or permission groups with low levels of active use, and even identify accounts with unnecessary administrative or privileged permissions.
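As a rough illustration of this kind of usage-based review (not Oleria's implementation), the sketch below compares granted permissions against the permissions actually exercised in a review window. The `Account` type, the `admin:` naming convention, the 25% threshold, and the sample data are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    granted: set  # permissions assigned to the account
    used: set     # permissions actually exercised in the review window


def utilization(account):
    """Fraction of granted permissions that were actually used."""
    if not account.granted:
        return 0.0
    return len(account.granted & account.used) / len(account.granted)


def review(accounts, low_use_threshold=0.25, admin_prefix="admin:"):
    """Map each flagged account name to a list of findings."""
    findings = {}
    for acct in accounts:
        flags = []
        if not acct.used:
            flags.append("unused account")
        elif utilization(acct) < low_use_threshold:
            flags.append("low utilization")
        # Privileged permissions that were granted but never exercised.
        unused_admin = {p for p in acct.granted - acct.used if p.startswith(admin_prefix)}
        if unused_admin:
            flags.append("unused admin permissions")
        if flags:
            findings[acct.name] = flags
    return findings


accounts = [
    Account("alice", {"docs:read", "docs:write"}, {"docs:read", "docs:write"}),
    Account("bob", {"docs:read", "docs:write", "hr:read", "admin:users", "crm:read"},
            {"docs:read"}),
    Account("old-contractor", {"docs:read", "crm:read"}, set()),
]
print(review(accounts))
# prints {'bob': ['low utilization', 'unused admin permissions'], 'old-contractor': ['unused account']}
```

The threshold is a judgment call. A stricter value flags more accounts for review, while a looser one focuses attention on the most extreme gaps between granted and used access.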

Oleria's solution makes it simple and quick to enforce least-privilege principles by removing unused accounts and by moving from role-based access tied to group memberships to more targeted, individual-level permissions for the critical, high-utilization users. In other words, Oleria makes it easy to ensure that the people who really need access have it, and that those who don't (including copilots and other GenAI tools) do not.

The Copilot future is here — whether you’re ready or not

Like the transformational technologies that came before it, there's no stopping the proliferation of GenAI across the enterprise landscape. Security leaders, along with the rest of the C-suite, have been rightly cautious in considering the insidious data privacy and data security risks of making these tools a connection point between internal systems and data and external audiences. But they need to be equally careful in deploying GenAI internally with tools like copilots and enterprise AI search — in particular, recognizing how GenAI will unwittingly exploit existing issues around over-provisioning and unintended access.

The upside here is that these over-provisioning problems already present substantial, largely hidden risks within the enterprise IT estate. The momentum of urgency and inevitability around GenAI will hopefully be the push businesses need to step up to a more modern approach to identity security and access control — one that delivers the comprehensive, fine-grained visibility and automated, intelligent insights they need to make zero-trust an achievable goal.

See Oleria's M365 Security Guide for actionable steps to mitigate AI copilot risks in your organization.

Want to see how Oleria can protect your M365 environment from Copilot security risks? Watch our recorded demo to see our solution in action.

